vim


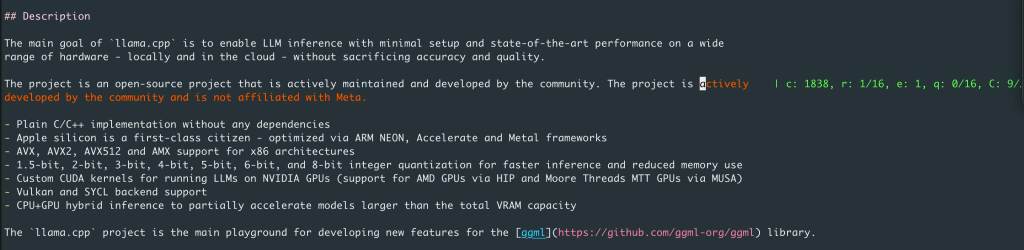

Supercharge Your Vim Editor Workflow with Local LLMs: Introducing llama.vim

Imagine if your Vim editor could think alongside you, generating code, explaining snippets, and refining text, all without leaving your terminal. In this article, we look at how to supercharge Vim with local, secure, and cost-free AI by combining open-source plugins with LLMs.
