llama3


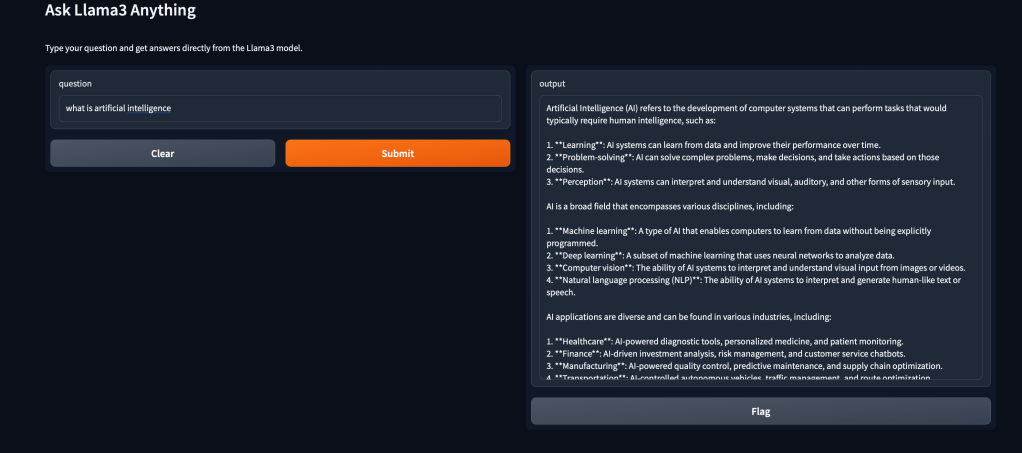

Run Deepseek-r1 and Phi4 Locally with Gradio and Ollama

Explore the power of AI on your own terms by running Llama3 and Phi3 models locally with Gradio and Ollama. This guide will show you how to harness these models in a Python environment, ensuring privacy, reduced latency, and complete control over your data. Whether you’re a developer or a tech enthusiast, learn how to…
